Introduction

In this post I’ll talk about my longest-running project. It started in mid-2000 as my Master’s thesis subject and ended up stretching until around mid-2013 (although it stayed in hibernation for almost 10 years in between). The project was an “automatic cell counter”. Roughly speaking, it is a computer program that takes an image with two kinds of cells and tells you how many cells of each kind there are in the image.

At the time, my advisor was Professor Adrião Duarte (one of the nicest people on this planet). I had no idea about the biology side of things, so I had a lot of help from Dr. Alexandre Sales and Dra. Sarah Jane, both from the “Laboratório Médico de Patologia” back in Brazil. They were the best at explaining all the medical stuff to me. They also provided a lot of images and tested the program.

The “journey” was an adventure: from publishing my first international paper and going to Hawaii on my first plane flight, down to asking the local funding agency (FAPERN) for money to buy a microscope. Although this won’t be a fully technical post, the goal is to talk about the project itself and the things related to it during the journey.

The problem

The motivation for the project was the need for cell counting in biopsy exams. The process goes more or less like this: when you need a biopsy of some tissue, the pathologist prepares one or more laminas (slides) with very thin sheets of the tissue. The tissue on those laminas is stained with a special pigment. That pigment carries a protein designed to attach itself to specific substances present in cells that are behaving differently (undergoing division, for instance). The result is that the pigment makes those cells (actually their nuclei) appear in a different colour, depending on the pigment used. That gives us a way to differentiate between the two “kinds” of cells in the lamina.

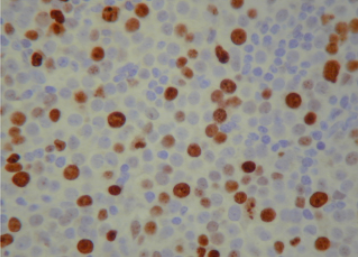

The next step is to count the cells and compute how many of them are coloured and how many are not. This ratio (percentage of coloured vs non-coloured) will be the result of the biopsy. The problem here is exactly the “counting” procedure. How the heck does one count individual cells? The straightforward way is to put the lamina under the microscope, look into it and, patiently and carefully, start counting by eye. Here we see a typical image used in this process.

There are hundreds of nuclei (cells) in this kind of image. Even if you capture the image and count them on a screen, the process is tedious! Moreover, the result can’t be based on a single image. Each lamina can produce dozens or hundreds of images, which are called fields. To give the result of an exam, several fields have to be counted.

Nowadays, there are machines that do this counting but they are still expensive. At the time I did the project, they were even more expensive and primitive. So, any contribution to this area that would help the pathologist to do this counting more precisely and easily would be very good.

The App

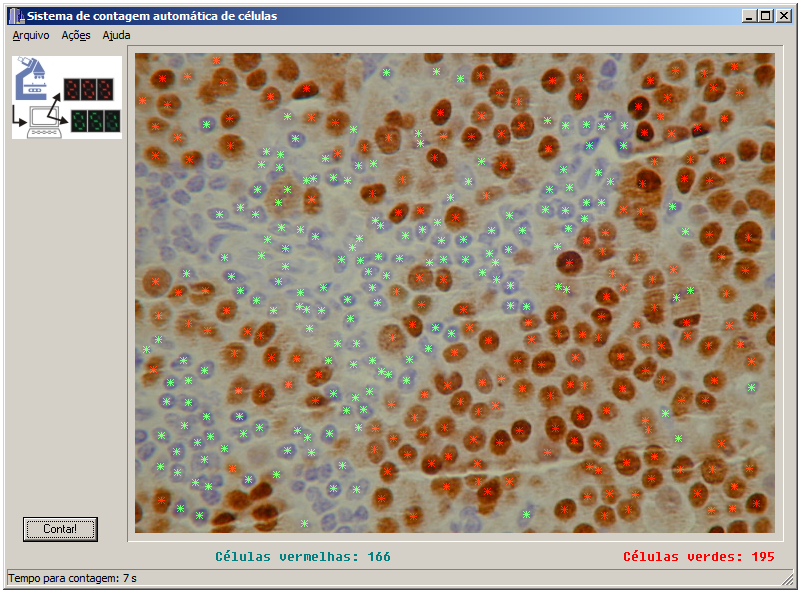

The solution proposed in the project was to make an application (a computer program, at the time) that would do this counting automatically. Even back then, it was relatively easy to attach a camera to a microscope. Today it is almost a default 😄. So, the idea was to capture the image from the camera coupled to the microscope, give it as input to the program, and have the program count how many cells are in the image.

Saying it like this makes it sound simple… But it is not trivial. The cells, as you can see in the previous sample image, are not uniformly coloured, there are a lot of artefacts in the image, the cells are not perfect circles, etc. Hence, I had to devise an algorithm to perform this counting.

The algorithm is basically divided into 4 main steps that are very common in image processing solutions:

1 – Pre-Processing

2 – Segmentation

3 – Post-Processing

4 – Counting

Algorithm

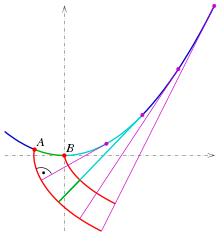

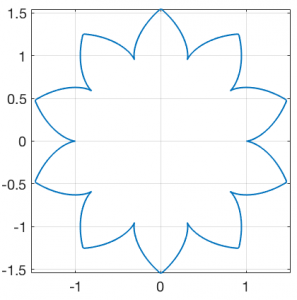

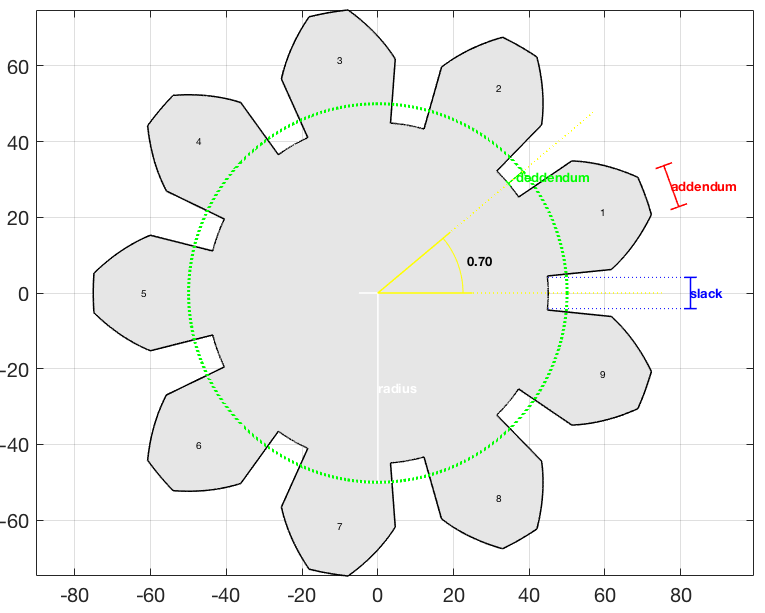

The pre-processing stage consisted of applying a colour transform and performing an auto-contrast procedure. The goal was to normalise the images for luminance, contrast, etc. The segmentation step consisted of labelling each pixel as one of three categories: cell 1, cell 2 or intercellular medium (non-cell). The result would be an image where each pixel is either 1, 2 or 3 (or any arbitrary triple of labels). After that comes the most difficult part, which was the post-processing. The segmentation left several cells touching each other and produced a lot of artefacts, like tiny regions that were marked as cells even though there were no cells there. Hence, the main scientific contribution of the project was to use mathematical morphology to process the segmented image and produce an image with only regular regions of pixels in the cells. Finally, the counting step gives the number of cells labelled as 1 or 2. The technical details of all those steps are in the Master’s thesis or in the papers (see references).
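To make the post-processing and counting steps a bit more concrete, here is a minimal sketch in plain Python. It is not the code from the thesis: it assumes the segmentation already produced a label image (a list of rows with values 1, 2 or 3), uses a simple morphological opening with a 3x3 square to remove tiny artefacts and thin bridges between touching cells, and then counts 4-connected regions. The structuring element, the connectivity and all function names are illustrative assumptions.

```python
from collections import deque

# 3x3 square structuring element (an assumption; the thesis may use another shape).
NEIGHBOURHOOD = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _morph(mask, combine):
    """Apply `all` (erosion) or `any` (dilation) over the 3x3 neighbourhood.

    Pixels outside the image are treated as background.
    """
    h, w = len(mask), len(mask[0])
    return [
        [
            combine(
                0 <= y + dy < h and 0 <= x + dx < w and mask[y + dy][x + dx]
                for dy, dx in NEIGHBOURHOOD
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def erode(mask):
    return _morph(mask, all)


def dilate(mask):
    return _morph(mask, any)


def opening(mask):
    """Erosion followed by dilation: drops specks and thin bridges."""
    return dilate(erode(mask))


def count_regions(mask):
    """Count 4-connected foreground regions with a breadth-first flood fill."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = 0
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            regions += 1
            seen[y][x] = True
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return regions


def count_cells(labels, cell_label):
    """Count the cells of one kind in a segmented label image."""
    mask = [[value == cell_label for value in row] for row in labels]
    return count_regions(opening(mask))
```

Calling `count_cells(labels, 1)` and `count_cells(labels, 2)` on the same label image gives the two numbers needed for the coloured vs non-coloured ratio. Real images would need a structuring element tuned to the cell size, which this sketch does not attempt.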

Old windows / web version

As the final product of the Master’s project, I implemented a Windows program in C++ Builder with a very simple interface. Basically, the user opens an image and presses a button to count. That was the program used by Dr. Alexandre in his laboratory to validate the results. I found the old code and made it available on GitHub (see references).

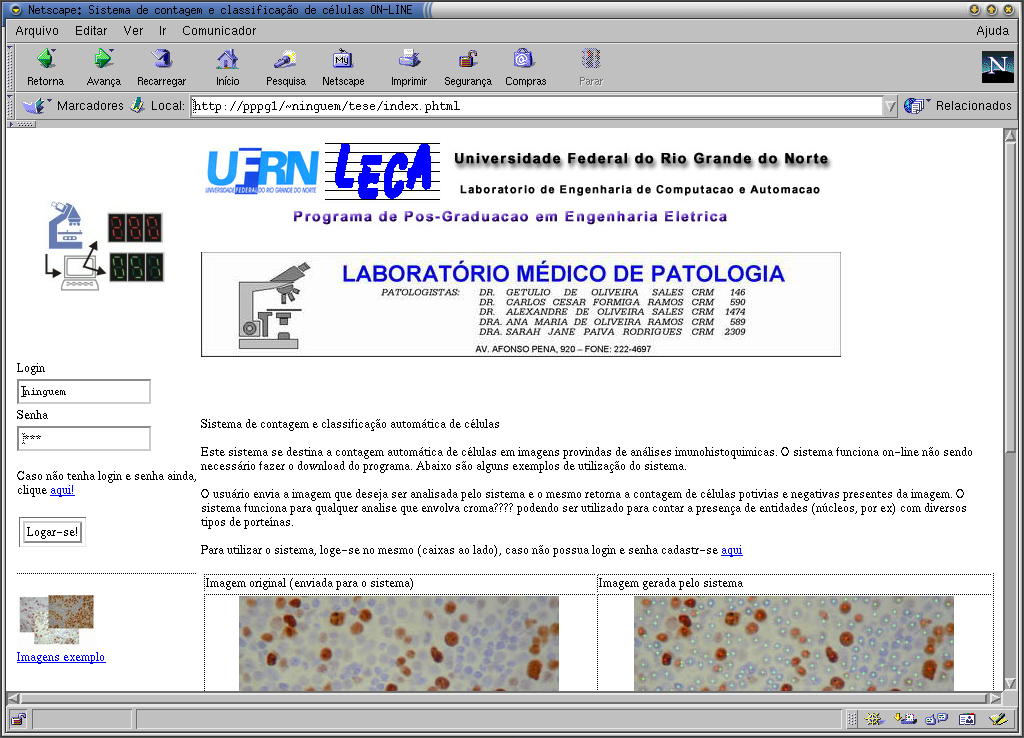

Together with the Windows program, I also made an “on-line version” of the application. At the time, an online system that would count cells from an uploaded image was a “wonder of technology”😂. Basically it was a web page powered by a PHP script that called a CGI-bin program, which did the heavy-duty work (the implementation of the algorithm) and showed the result. Unfortunately I lost the web page and the CGI code. All I have now is a screenshot that I used in my Master’s presentation.

iOS

After the Master’s defence, I still worked on it for a couple of months, fixing some small stuff and making small changes here and there. Then, I practically forgot about the project. Dr. Alexandre kept using it for a while but no modifications were made.

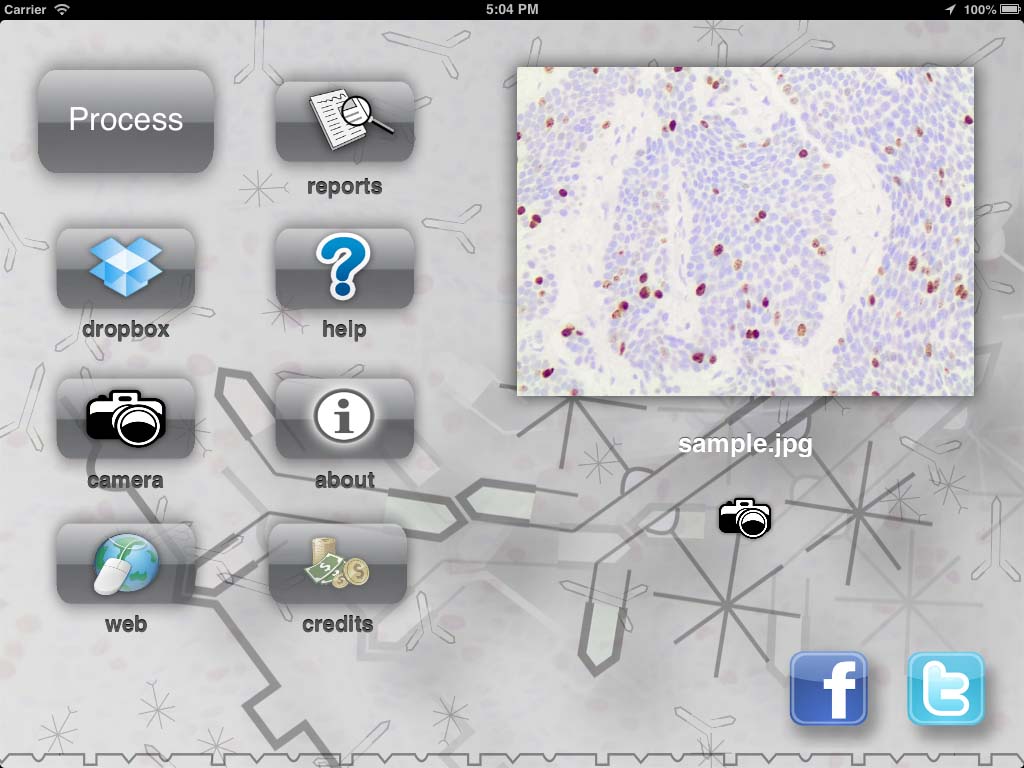

Almost 10 years later (around 2012), I was working with iOS development and had the idea of “porting” the program to a mobile platform. Since the iPhone (and iPad) have cameras, maybe we could use them to take a picture directly through the microscope and have the counting right there! So, I did it. I made an iOS app called PathoCounter with which you could take a picture and process the image directly on the device.

The first version worked only on my phone, just as a proof of concept (and it worked). Then I realized that the camera on the phone was not the only advance that those 10 years of Moore’s law had given me. There was a gazillion of new technologies that I could use in the new version of the project! I could use the touch screen to make the interface easy to operate and allow the user to give more than just the image to the app. The user could paint areas where they knew there were no cells. We could use more than the camera to get images: Dropbox, the web, the phone’s gallery, etc. We could produce a report right on the phone and share it with other pathologists. The user could have an account to manage their images. And so on… So I did most of what came to my mind and ended up with a kind of “high end” cell counting app, which I made available in the Apple App Store. I even put in a small animation of the antigen proteins “fitting” into the cell body 🤣. To my surprise, people started to download it!

At first I hosted a small web system where users could log in and store images to use within the app. I tried to obey some rules for website design but I’m terrible at it. Nevertheless, I was willing to “invest my time” to learn how real software would work. I even learned how to promote a site on Facebook and Google 😅.

However, I feared that if I had many users the server would not be robust enough, so I tried a crowdfunding campaign at indiegogo.com to buy a good computer to use as a private server. To my surprise, some friends backed the campaign and, in particular, one of my former students, Victor Ribas, donated $500! I’m still thankful to him and I was very happy to see such support!! Unfortunately, the amount collected wasn’t enough to buy anything different from what I already had, so I decided to donate the money to a local children’s hospital that treats children with cancer. Since the goal of the app was to help out in the fight against cancer, I figured that the hospital was a good choice for the donation.

Sadly, this campaign also attracted the wrong kind of attention. A couple of weeks after the campaign closed and I donated the money, I was formally accused of unethical fund-raising for public research. Someone in my department filed a formal accusation against me and, among other things, claimed that I was obtaining people’s money through unsuccessful crowdfunding campaigns. That hurt a lot. Even though it was easy to write a defence, because I hadn’t even kept the money, I had to call the hospital I had donated to and ask for a “receipt” to attach as proof in the process. They had offered me a receipt when I made the donation, but I never imagined that two weeks later I would go through this kind of situation, so at the time I told them it wasn’t necessary because I was not a legal entity or company and I didn’t want to bother them with the bureaucracy.

Despite this pebble in the path, everything that followed was great! I had more than 1000 downloads from all around the world. That alone was enough to make me very happy. I also decided to keep my small server running. Some people even got to use the server, although just a handful. Another cool thing that happened was that I was contacted by two pathologists outside Brazil: one from Italy and another from the United States. The app was free to download and the user had 10 free exams as a demo. After that the user could buy credits, which nobody did 😂 (I guess because you could just reinstall the app to get the free exams again 😂). I knew about that “bug”, but I was so happy to see that people were actually using the app that I didn’t bother to fix it (although Dr. Alexandre told me that I was a “dummy” for not properly monetising the app 🤣).

The app was doing so much more than I expected that I even made some videos explaining how to use it. Moreover, I released about 2 or 3 updates that added some small new features, fixed bugs, etc. In other words, I felt like I was developing something meaningful, and people were using it!

Results

As I said, the main result for me was the usage. Knowing that people were actually using my app felt great. Actually, the pathologist from the United States who contacted me said that they were using it in dog exams! I didn’t even know that they did biopsies on animals 😅.

Analytics

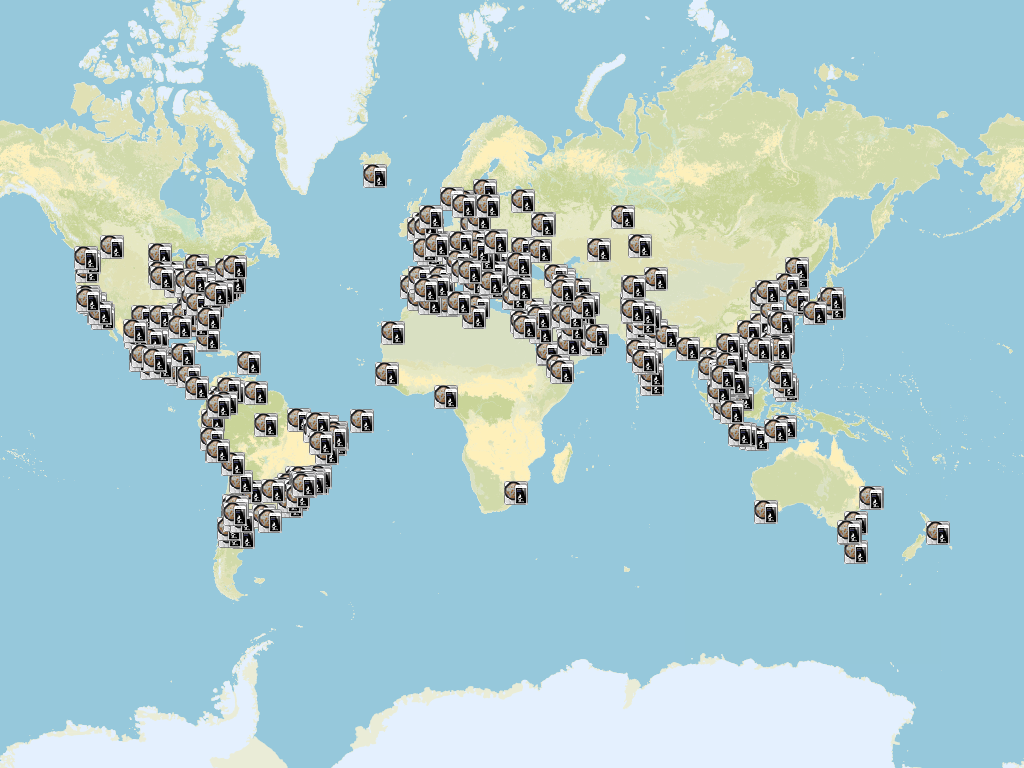

At the time I was into the iOS programming “vibe”, I used to put a small module in all my apps to gather anonymous analytics data (usage statistics). One of the things I gathered, when the user permitted it of course, was the location where the app was being used. Basically, every time you used the software, the app sent the location (without any information or identification of the user whatsoever) and I stored that location. After one or two years of usage, this was the result (see figure).

Each icon is a location where the app was used and the user agreed to share the location. The image speaks for itself. Basically all the continents were using the app. That is the magic of online stores nowadays. Anybody can have their app used by, easily, thousands of people! I’m very glad that I lived this experience of software development 😃.

Media

Somehow the app even got the media’s attention. A big local newspaper and one of the public access TV channels here in my city contacted me. They asked if they could write an article about the app and interview me about it 🤣😂. I told them that I would look bad in front of the camera but I would be very happy to talk about the app. Here is the first page of the article in the “Tribuna do Norte” newspaper and the videos of the interviews (TV Tribuna and TV Universitária UFRN).

Conclusion

I don’t know for sure if this app helped somebody diagnose an early cancer or helped some doctor prescribe a more precise dosage of some medicine. However, it shows that everyone has the potential to do something useful. Technology is allowing advances that we could never have imagined (even 10 years earlier, when I started my Master’s). The differences in technology from 2002 to 2012 are astonishing! From a Windows program, through an online web app, to a mobile app distributed worldwide, software development has certainly changed a lot!

As always, thank you for reading this post. Please, feel free to share it if you like. See you in the next post!

References

Wikipedia – Immunohistochemistry

Sistema de Contagem e Classificação de Células Utilizando Redes Neurais e Morfologia Matemática – Master’s thesis

MARTINS, A. M.; DÓRIA NETO, Adrião Duarte ; BRITO JUNIOR, A. M. ; SALES, A. ; JANE, S. Inteligent Algorithm to Couting and Classification of Cells. In: ESEM, 2001, Belfast. Proceedings of the Sixth Biennial Conference of the European Society for Engineering and Medicine, 2001.

MARTINS, A. M.; DÓRIA NETO, Adrião Duarte ; BRITO JUNIOR, A. M. ; SALES, A. ; JANE, S. Texture Based Segmentation of Cell Images Using Neural Networks and Mathematical Morphology. In: IJCNN, 2001, Washington. Proceedings of IJCNN2001, 2001.

MARTINS, A. M.; DÓRIA NETO, Adrião Duarte ; BRITO JUNIOR, A. M. ; FILHO, W. A. A new method for multi-texture segmentation using neural networks. In: IJCNN, 2002, Honolulu. Proceedings of IJCNN2002, 2002.

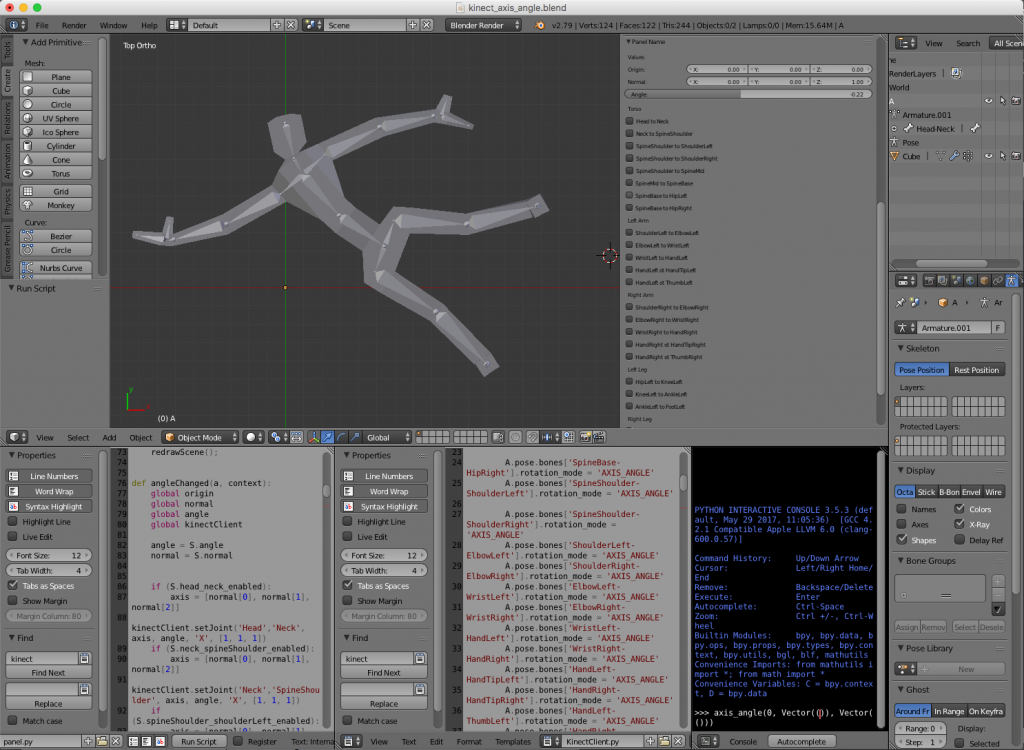

Hence, the Blender file is basically composed of three parts: the 3D body, the panel script and a Kinect interface script. The challenge was to interface the Kinect with Python. For that I used two awesome libraries: pygame and pykinect2. I’ll talk about them in the next section. Unfortunately, the libraries did not like Blender’s Python environment and were unable to run inside Blender itself. The solution was to implement a client-server structure: a small Inter Process Communication (IPC) system with local sockets. The Blender script would be the client (sockets are ok in Blender 😇) and a terminal application would run the server.
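A minimal sketch of that kind of local-socket IPC, using only the Python standard library. The wire format here (a `pose` text command answered with one line of JSON), the joint names and the `fake_pose` function are hypothetical stand-ins: in the real setup the server side would read the skeleton from the Kinect through pykinect2, which is not shown.

```python
import json
import socket
import threading

HOST = "127.0.0.1"  # local-only communication


def serve_one_client(server_sock, get_pose):
    """Server side (terminal app): answer each 'pose' line with a JSON line."""
    conn, _addr = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        for line in reader:  # loop ends when the client disconnects
            if line.strip() == "pose":
                reply = json.dumps(get_pose()) + "\n"
                conn.sendall(reply.encode("utf-8"))


def request_pose(port):
    """Client side (what a Blender script could do): ask for the current pose."""
    with socket.create_connection((HOST, port), timeout=2.0) as sock:
        sock.sendall(b"pose\n")
        with sock.makefile("r", encoding="utf-8") as reader:
            return json.loads(reader.readline())


def fake_pose():
    # Hypothetical joint data standing in for a real Kinect reading.
    return {"head": [0.0, 1.6, 0.0], "hand_left": [-0.4, 1.1, 0.2]}


def demo():
    """Run server and client in one process, just to exercise the protocol."""
    server_sock = socket.create_server((HOST, 0))  # port 0: let the OS pick one
    port = server_sock.getsockname()[1]
    worker = threading.Thread(
        target=serve_one_client, args=(server_sock, fake_pose), daemon=True
    )
    worker.start()
    try:
        return request_pose(port)
    finally:
        worker.join(timeout=2.0)
        server_sock.close()


if __name__ == "__main__":
    print(demo())
```

In practice the server and the client would live in two separate processes with a fixed, agreed-upon port; they are combined here only so the sketch can run on its own.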

(source: https://www.sp.edu.sg/mad/about-sd/facilities/motion-capture-studio)

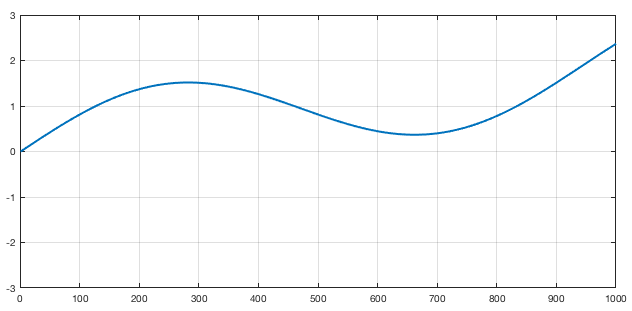

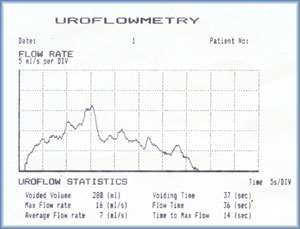

Figure 1 : Sample of a Uroflowmetry result[3]

Then, he prescribed a very common medicine to improve the results and told me that I would repeat the exam a couple of days later. After about 4 or 5 days I returned for the second exam. I repeated the whole process and peed in the USD $10,000.00 toilet. This time I didn’t feel I gave my “average” performance. I guess sometimes you are nervous, stressed or just not concentrated enough. So, as expected, the results were not ultra mega perfect. Long story short, the doctor acknowledged the result of the exam and conducted the rest of the visit (I’m fine, if you are wondering heheheh).

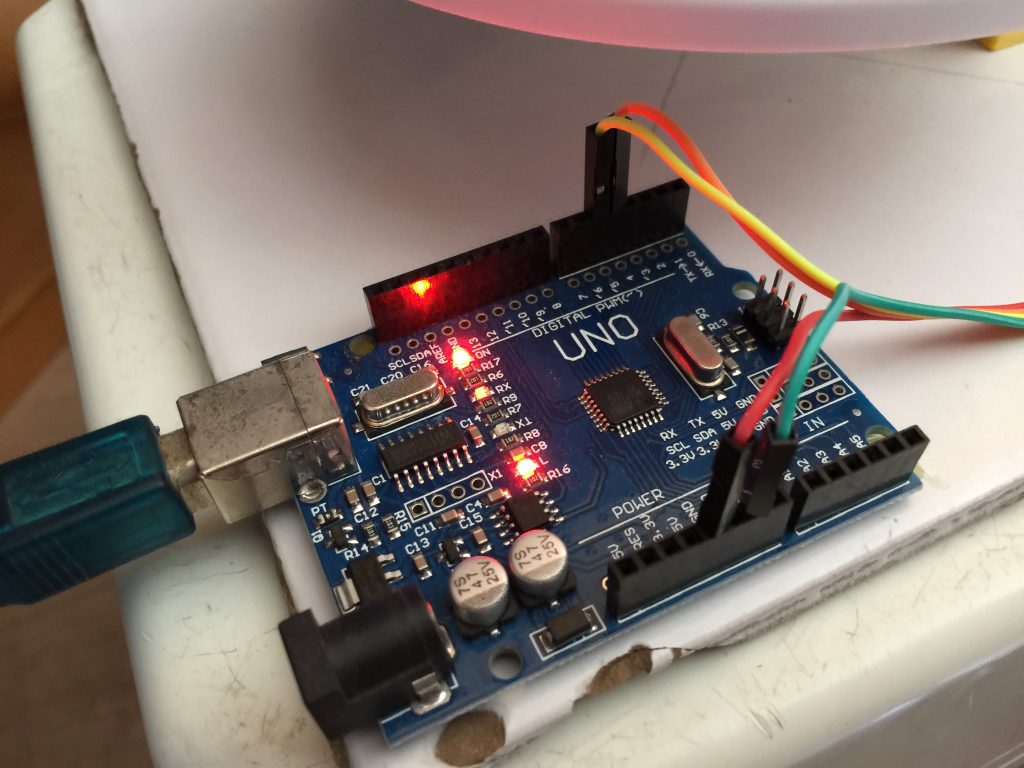

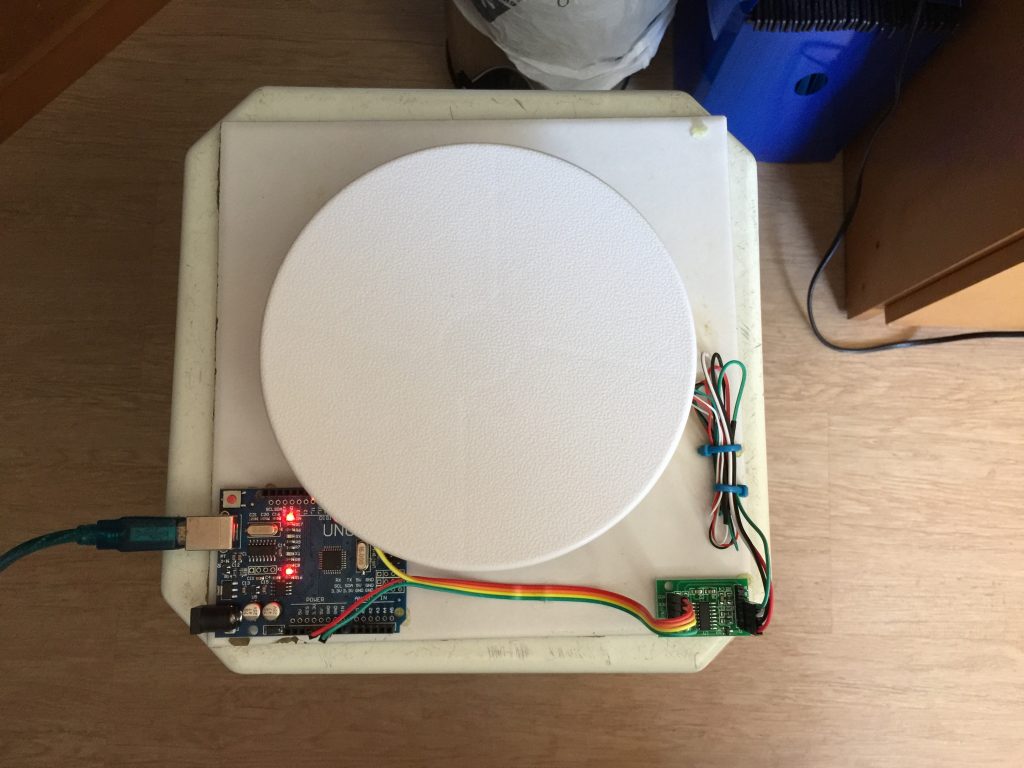

Figure 2 : Arduino board

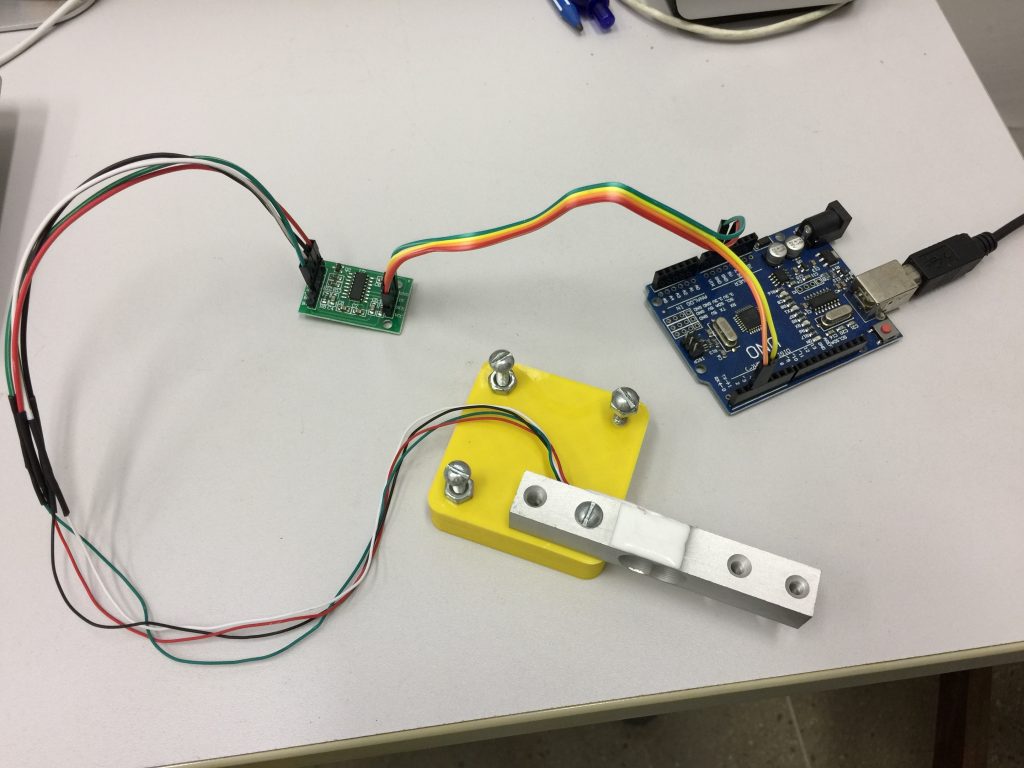

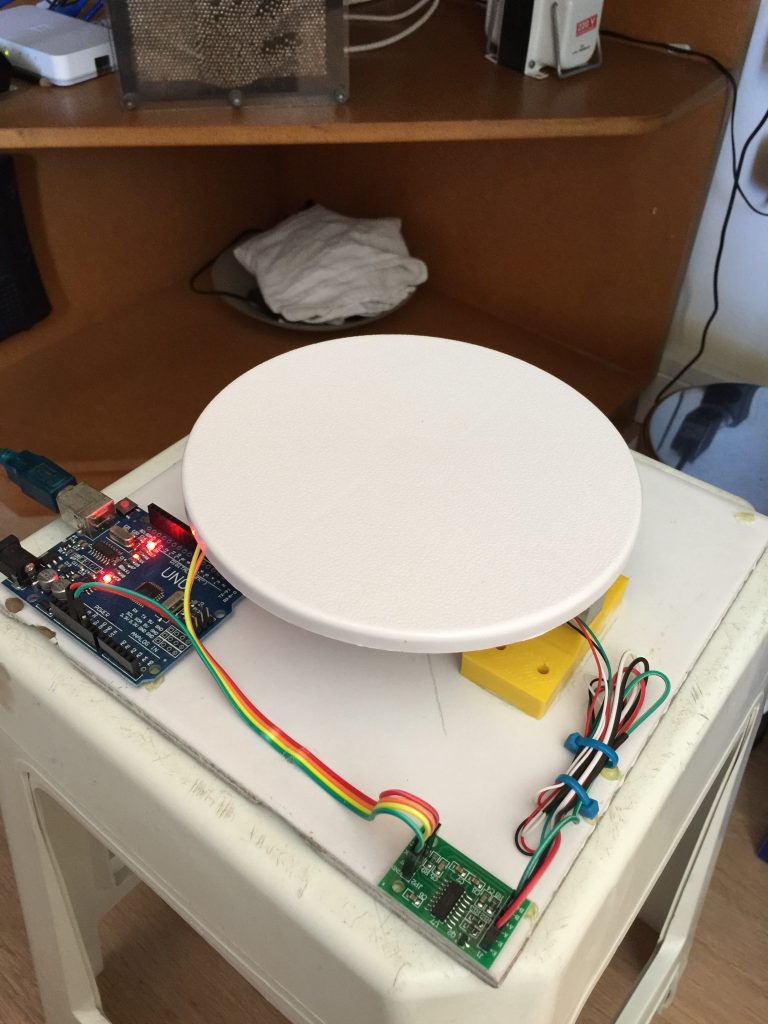

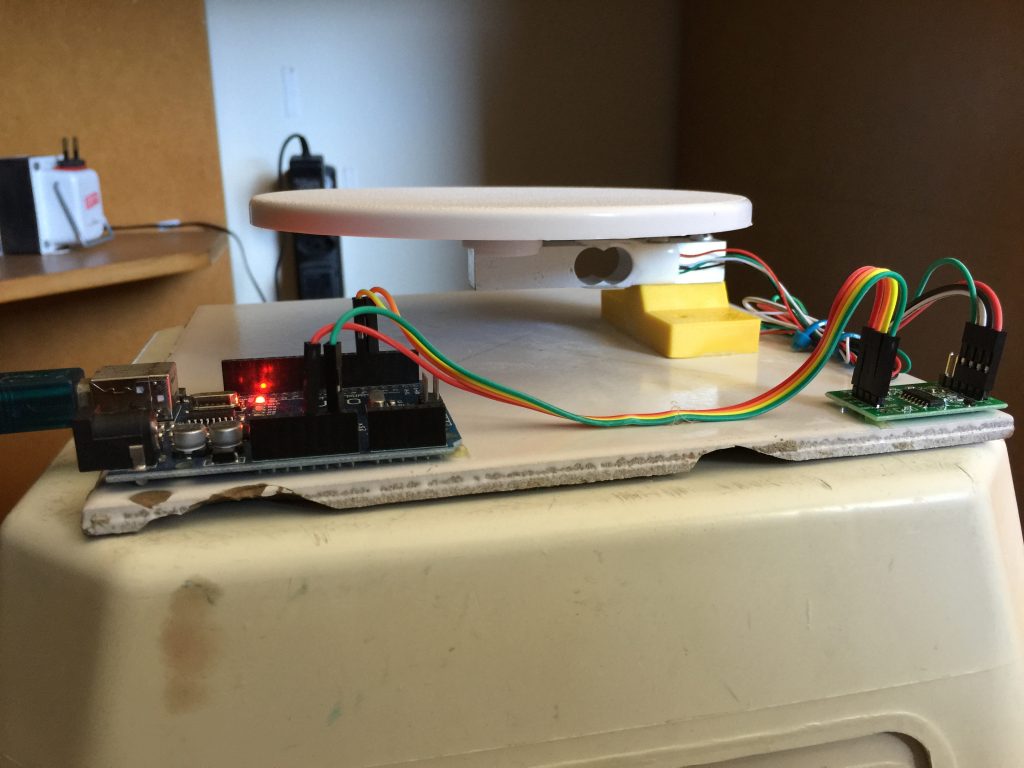

First I had to make the digital scale, so I used a load cell that had the right range for this project. The weight of the urine would range from 0 to 300~500 g more or less, so I acquired a load cell with a 2 kg range (Figures 3 and 4). I disassembled an old kitchen scale to reuse the “plate”, which had the right space to hold the load cell, and 3D printed a support mounted on a heavy base to avoid measurement errors due to the flexing of the base. For the base, I used a heavy ceramic tile that worked very well. The cell “driver” was an Arduino library called HX711. There is a cool YouTube tutorial showing how to use this library [1].

Figure 3 : Detail of the load cell used to make the digital scale.

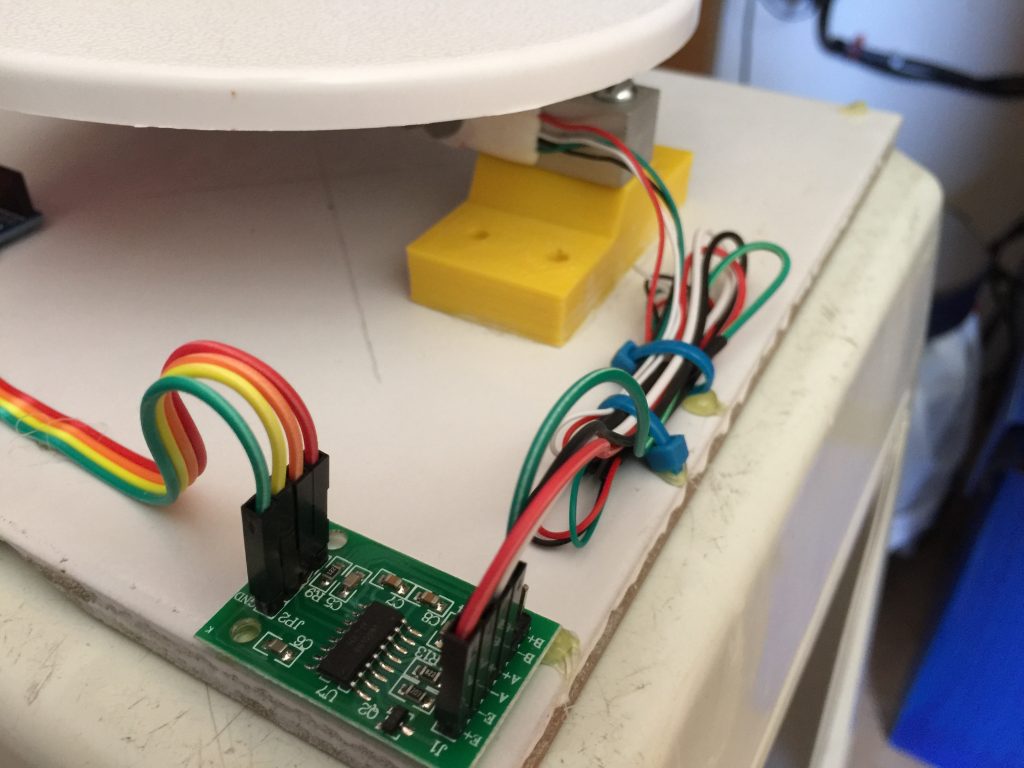

Figure 4 : Loadcell connected to the driver HX711

The next problem was how to connect it to the computer!!! This is not a regular project where you can assemble everything in your office and run tests. Remember that I had to pee on the hardware! I also didn’t want to bring a notebook to the bathroom every time I wanted to use it! The solution was to use a wifi module and communicate wirelessly. Figure 5 shows the module I used. It’s an ESP8266. Now I could bring my prototype to the bathroom and pee on it while the data was sent safely to the computer.

Figure 5 : ESP8266 connection to the Arduino board.

Figure 6 : More pictures of the prototype

Once built, I had to calibrate it very precisely. I did some tests to measure the sensitivity of the scale. I used an old lock and a small ball bearing as calibration weights. I went to the Metrology laboratory at my university, where a very nice technician (I’m so sorry I forgot his name, but he was very, very kind) measured the weights of the lock and the ball bearing on a precision scale. Then I started to do some tests (see video below).
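With two objects of known weight, one simple way to calibrate is a two-point linear fit that maps the raw load cell readings to grams. The sketch below assumes the load cell response is linear over the range of interest; the function names and any numbers you feed it are illustrative, not the values measured for the prototype.

```python
def two_point_calibration(raw_a, weight_a, raw_b, weight_b):
    """Return (scale, offset) so that weight = scale * raw + offset.

    raw_a / raw_b are the raw readings taken with the two reference
    objects on the plate; weight_a / weight_b are their known weights.
    """
    if raw_a == raw_b:
        raise ValueError("the two reference readings must differ")
    scale = (weight_b - weight_a) / (raw_b - raw_a)
    offset = weight_a - scale * raw_a
    return scale, offset


def raw_to_grams(raw, scale, offset):
    """Convert a raw reading to grams using the fitted line."""
    return scale * raw + offset
```

A quick sanity check after calibrating is to put each reference object back on the plate and confirm that `raw_to_grams` returns its known weight.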

The last thing needed to make the prototype 100% finished was a funnel of the right size. So I 3D printed a custom-size funnel I could use (Figure 7).

Figure 7: Final prototype ready to use

Once the scale was calibrated and ready to measure weights in real time, it was time to code the flow calculation. At first sight, this is a straightforward task: you just differentiate the measurements in time. Something like

$$
\begin{equation}
f[n] = \frac{w[n] - w[n-1]}{\rho \Delta t}
\end{equation}
$$

Where \(f[n]\) and \(w[n]\) are the flow and weight at time \(n\). \(\rho\) is the density and \(\Delta t\) is the time between measurements.
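A minimal sketch of this finite-difference step in Python. It assumes the scale reading grows as the container fills, so the flow is taken as the increase in weight between consecutive samples, and it assumes weights in grams, time in seconds and a density close to that of water (1 g/mL), which gives the flow in mL/s. The function name and the units are assumptions, not taken from the original code.

```python
def flow_from_weights(weights_g, dt_s, density_g_per_ml=1.0):
    """Backward difference of the weight signal, converted to volume flow.

    weights_g: weight samples in grams, taken every dt_s seconds.
    Returns one flow value (mL/s) per pair of consecutive samples.
    """
    if dt_s <= 0 or density_g_per_ml <= 0:
        raise ValueError("dt_s and density_g_per_ml must be positive")
    return [
        (weights_g[n] - weights_g[n - 1]) / (density_g_per_ml * dt_s)
        for n in range(1, len(weights_g))
    ]
```

Differentiating a measured signal amplifies its noise, which is why the raw flow is usually filtered before reading values such as the maximum flow from it.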

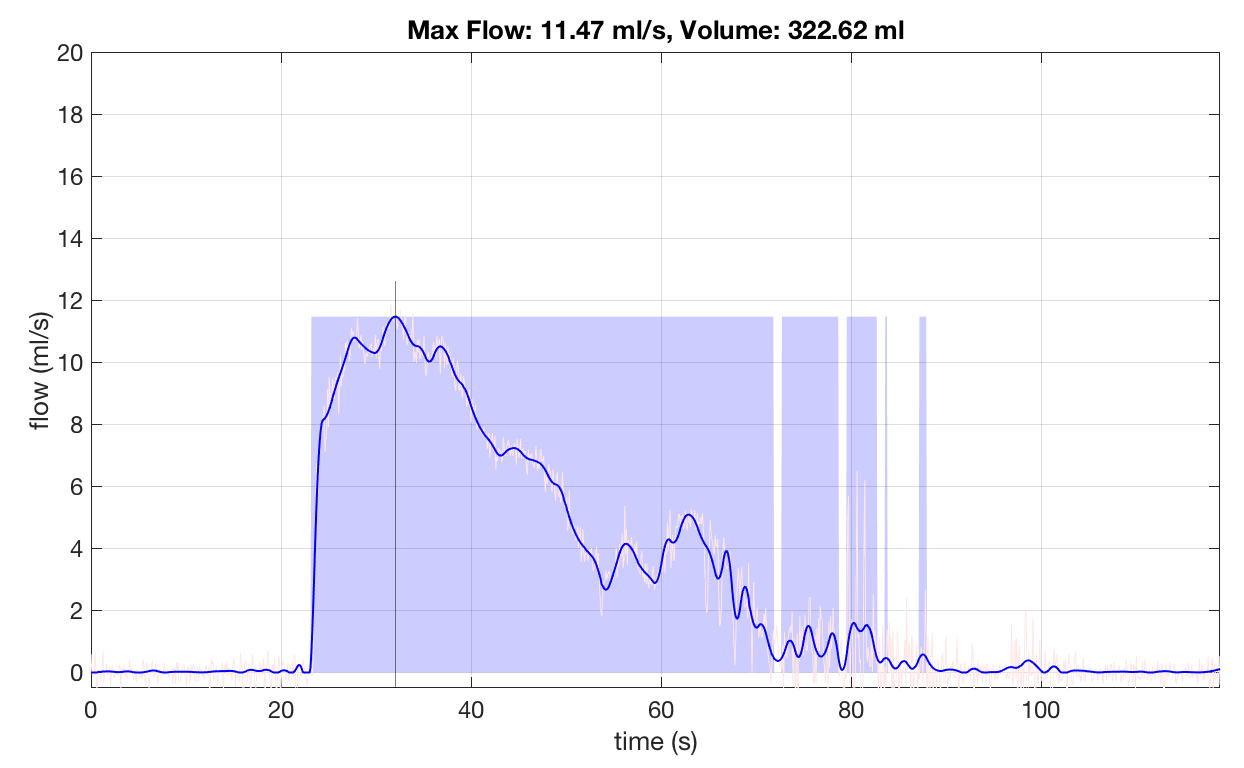

Figure 8 : Result of a typical exam done with the prototype.

The blue line is the filtered plot. The raw flow can be seen in light red. The black line marks where the max flow is, and the blue regions estimate the intervals of non-zero flow (if you get several blue regions, it means you stopped peeing several times in the same “go”). At the top of the plot you have the two numbers that the doctor is interested in: the max flow and the total volume.
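The quantities in that plot can be sketched as follows. The filter actually used is not stated here, so a centred moving average stands in for it; the window size, the threshold that decides what counts as “non-zero” flow and all names are assumptions made for illustration.

```python
def moving_average(values, window=5):
    """Centred moving average; the window shrinks near the edges."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def summarize_exam(flow_ml_s, dt_s, window=5, threshold=0.5):
    """Return max flow, its sample index, total volume and non-zero regions."""
    if not flow_ml_s:
        raise ValueError("flow_ml_s must not be empty")
    filtered = moving_average(flow_ml_s, window)
    max_flow = max(filtered)
    max_index = filtered.index(max_flow)
    # Total volume: integrate the raw flow over time (rectangle rule).
    total_volume_ml = sum(flow_ml_s) * dt_s
    # Regions of non-zero flow as half-open (start, end) index intervals.
    regions, start = [], None
    for i, value in enumerate(filtered):
        if value > threshold and start is None:
            start = i
        elif value <= threshold and start is not None:
            regions.append((start, i))
            start = None
    if start is not None:
        regions.append((start, len(filtered)))
    return {
        "max_flow": max_flow,
        "max_index": max_index,
        "total_volume_ml": total_volume_ml,
        "regions": regions,
    }
```

More than one entry in `regions` corresponds to the case of several separate bursts in the same exam.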

With everything working, it was time to use it! I spent 40 days using it and collected around 120 complete exams. Figure 9 shows all of them in one plot (just for the fun of it %-).

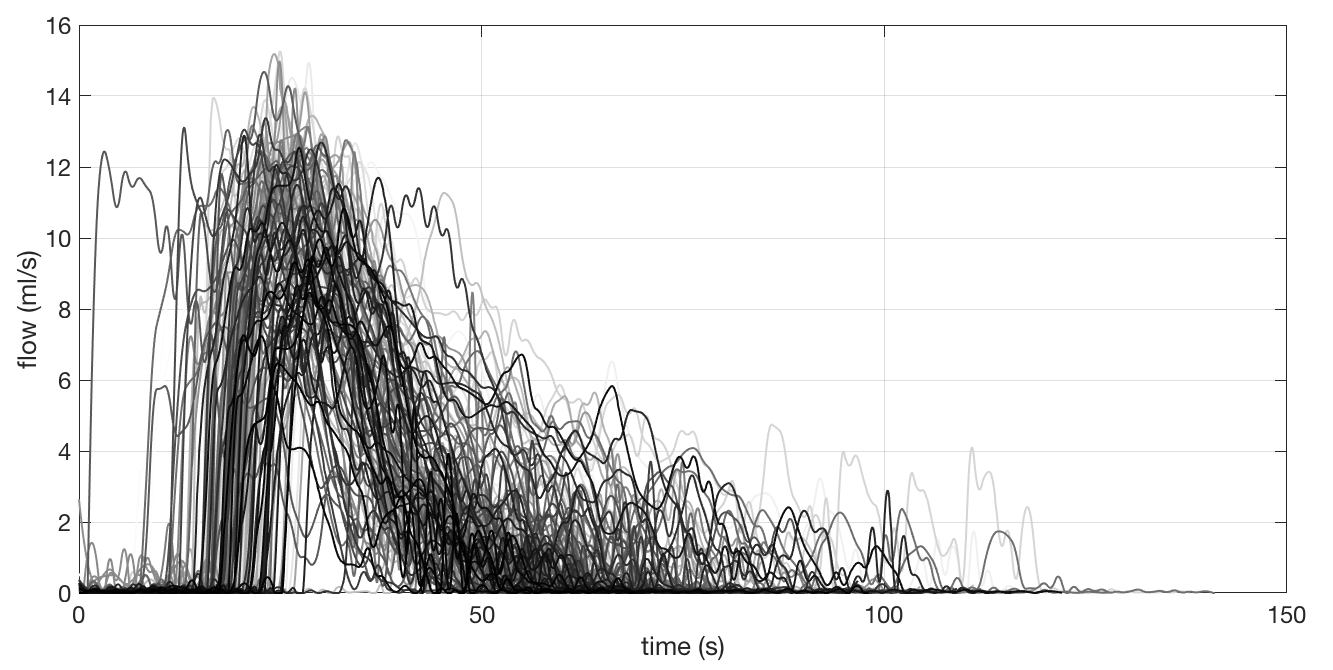

Figure 9 : 40 days of exams in one plot

Obviously, this plot is useless. Just showing a lot of exams does not mean much. Hence, what I did was to do the exams for some days without the medicine and then with the medicine. Then I extracted only the max flow of each exam and plotted it over time. I also computed the mean and the standard deviation of the points with and without the medicine and plotted them in the same graph. The result is shown in Figure 10.
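The per-group statistics can be sketched with Python’s `statistics` module. The input format (a list of `(max_flow, on_medicine)` pairs) and the function name are assumptions made for illustration; no values from the real exams are reproduced here.

```python
from statistics import mean, stdev


def summarize_groups(exams):
    """Mean and sample standard deviation of the max flow for each group.

    exams: iterable of (max_flow, on_medicine) pairs, where on_medicine
    is True for exams taken while using the medicine.
    Each group needs at least two exams for the standard deviation.
    """
    groups = {False: [], True: []}
    for max_flow, on_medicine in exams:
        groups[bool(on_medicine)].append(float(max_flow))
    return {
        on_medicine: (mean(values), stdev(values))
        for on_medicine, values in groups.items()
    }
```

Comparing the two means against the spread of each group is what the horizontal bands in such a plot convey; a formal test (for example a t-test) would be the next step if a stronger statement were needed.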

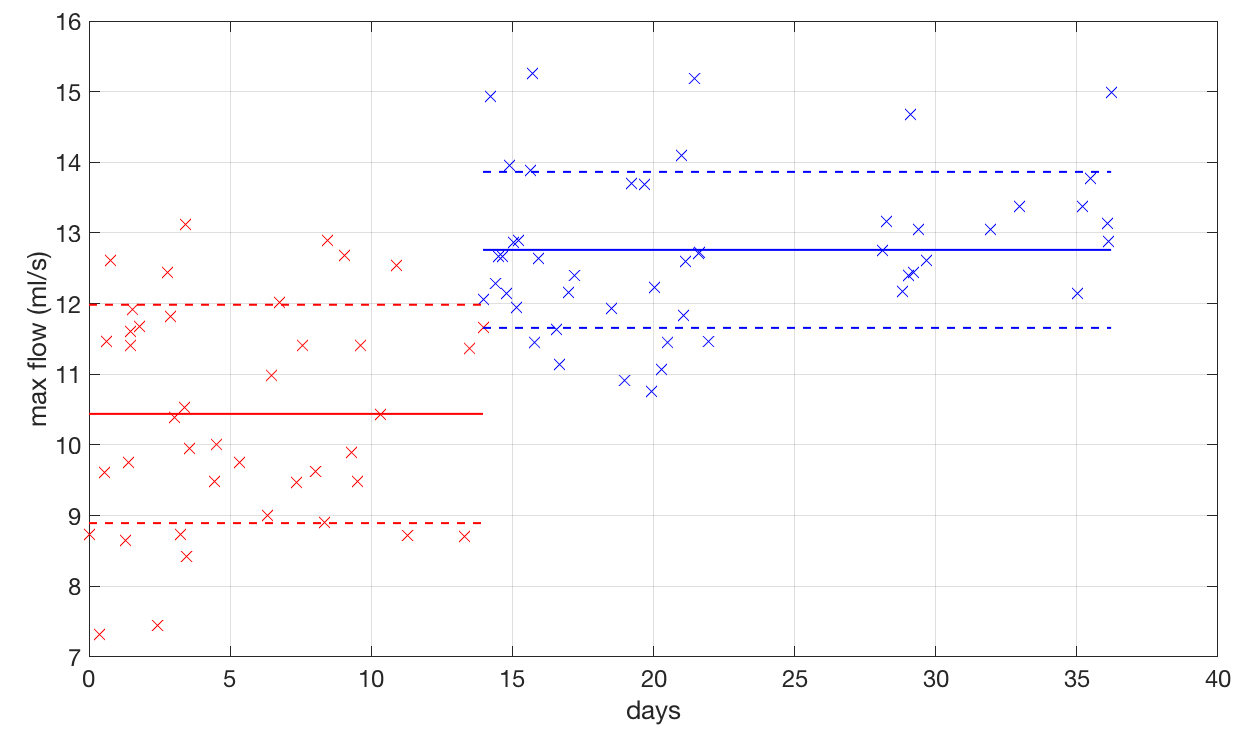

Figure 10 : Plot of max flow versus time. Red points are the max flow results without the medicine. The blue ones are the results with the medicine.

Well, as you can see, the medicine works a bit for me! The averages for the two situations are different, and they are within more or less one standard deviation of each other. However, with this plot you can see that sometimes, even with the medicine, I got some low values… Those were the days I was not at my best for taking the water out of my knee. On the other hand, on some other days, even without the medicine, I was feeling like the bullet that killed Kennedy and got a good performance! In the end, statistically, I could show that the medicine was indeed working.

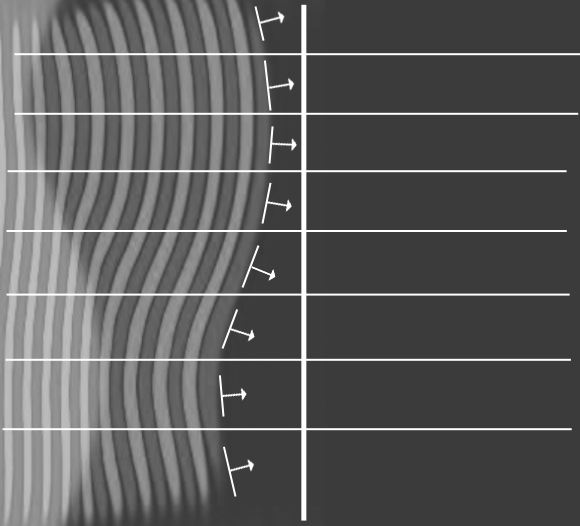

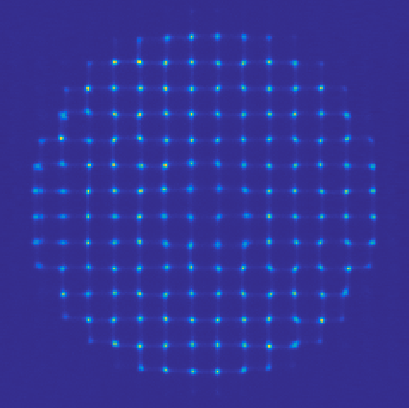

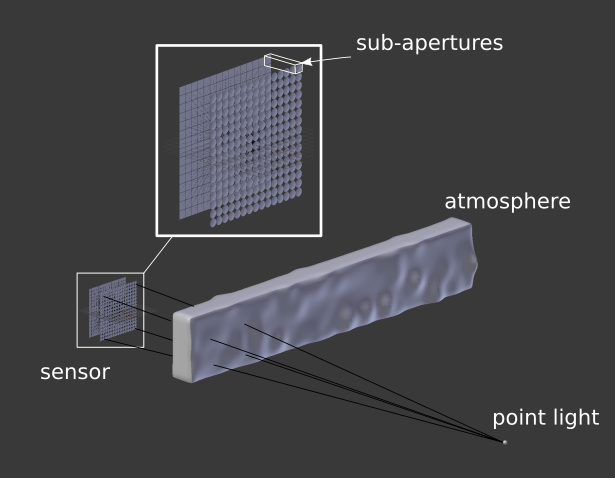

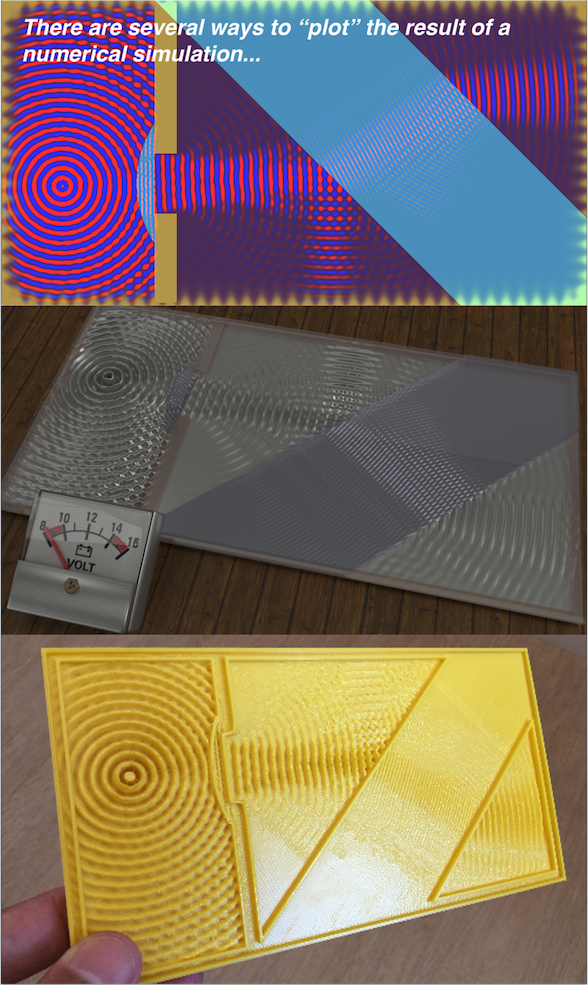

Figure 3: The ways you can visualize the solution to Maxwell’s equation for a boundary condition involving a source, a lens and a tilted glass block.

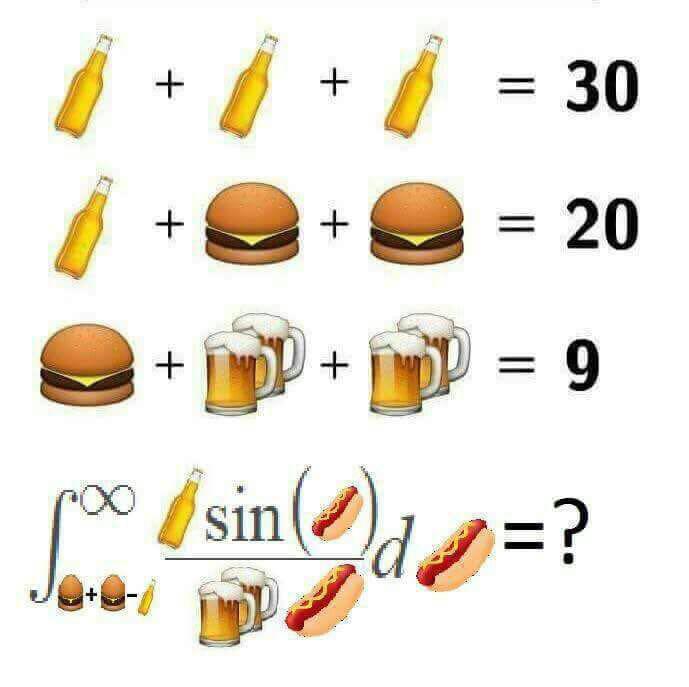

The beginning of the meme is, as you most probably know, one of those famous “linear systems of equations with emojis as variable names”. Fun fact about those memes: most of them present systems that are quasi-triangular and, hence, very easy to solve. Anyway… In general, they give you 3 or 4 equations with right-hand-side values and ask you to compute a 4th or 5th expression that is, in general, another linear combination of the variables.
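To see why a triangular system is so easy, here is a small sketch of forward substitution in Python: each equation introduces only one new unknown, so it can be solved immediately from the values already found. The three-fruit puzzle used in the comment is a made-up example of the genre, not one taken from a specific meme.

```python
from fractions import Fraction


def solve_lower_triangular(rows, rhs):
    """Solve a lower-triangular system by forward substitution.

    rows[i] holds the coefficients of unknowns 0..i in equation i,
    and rhs[i] is the right-hand side of that equation.
    """
    values = []
    for i, (coeffs, b) in enumerate(zip(rows, rhs)):
        pivot = coeffs[i]
        if pivot == 0:
            raise ValueError(f"equation {i} does not determine unknown {i}")
        # Contribution of the unknowns that are already solved.
        known = sum(Fraction(c) * v for c, v in zip(coeffs[:i], values))
        values.append((Fraction(b) - known) / pivot)
    return values


# Hypothetical puzzle: 3a = 30, a + 2b = 18, b - c = 2; asked for a + b + c.
apple, banana, coconut = solve_lower_triangular(
    [[3], [1, 2], [0, 1, -1]], [30, 18, 2]
)
answer = apple + banana + coconut
```

Using `Fraction` keeps the arithmetic exact, which is handy when a puzzle sneaks in a value that is not an integer.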
